Calculating PSD from a Time-history File

June 25, 2019

Back to: Random Testing

Vibration Research software uses Welch’s method for power spectral density (PSD) estimation. This method applies the fast Fourier transform (FFT) algorithm to the estimation of power spectra.

In regards to his method, Peter D. Welch said, “[the] principal advantages of this method are a reduction in the number of computations and in required core storage, and convenient application in nonstationarity tests.”1

The process begins with Gaussian-distributed time-domain input data—i.e., a time-history file.

Summary: Calculating PSD from a Time-history File

- Partition data into frames of equal length in time

- Transform each frame into the frequency domain using the FFT

- Covert frequency-domain data to power by taking the squared magnitude (power value) of each frequency point

- Average the squared magnitudes of each frame

- Divide the average by the sample rate to normalize the PSD to a single hertz (Hz) bandwidth

A few additional factors affect the final PSD, including windowing and overlapping. Let’s look at each step in more detail.

Moving to the Frequency Domain

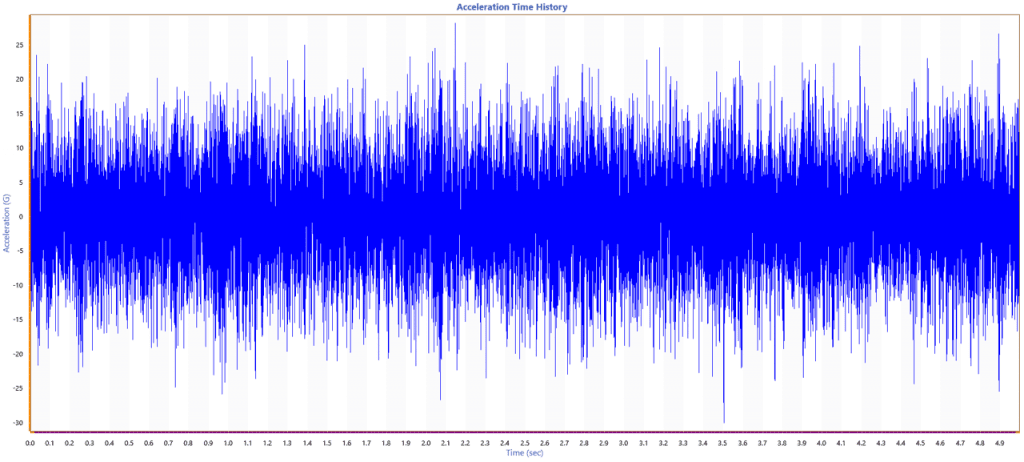

Figure 2.8 shows five seconds of Gaussian-distributed random vibration data in a time-history file. It is difficult to extract any meaningful information from the graph other than the peak acceleration which appears to be around -30G.

Figure 2.8. A five-second time-history graph.

To extract useful information from the vibration data, we must view it in the frequency domain. Moving to the frequency domain requires two calculations: the FFT and PSD.

Fast Fourier Transform (FFT)

The FFT transforms data into an acceleration versus frequency plot. The transform does not use nor require windowing, averaging, or normalizing functions.

Engineers often use the FFT graph to monitor the frequency spectrum. They can focus on changes in the frequency spectrum while viewing live data or analyzing a time-history file. However, to view energy distribution across the frequency spectrum, we must calculate the PSD from the FFT.

Calculating the PSD from a Time-history File

The steps to calculating PSD are as follows:

1. Divide the time-history file into frames of equal time length

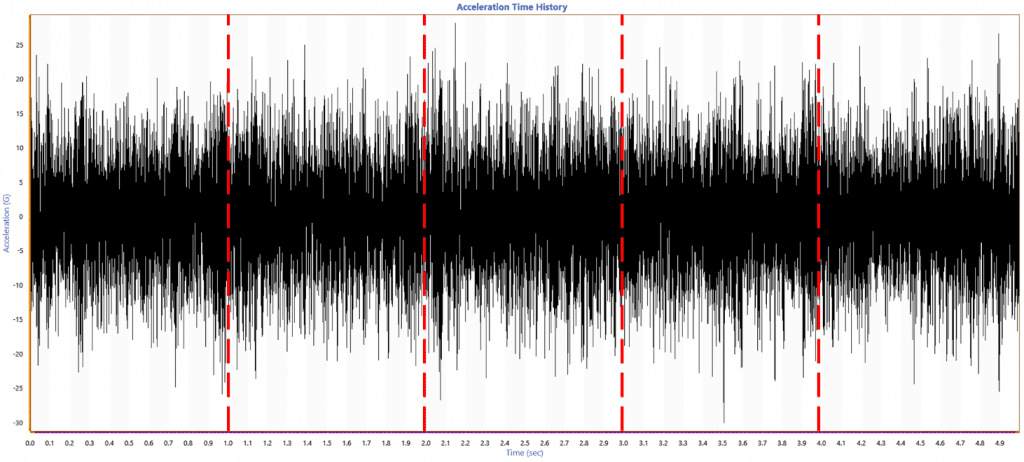

First, the time-history file should be divided into frames of equal time length. The lines of resolution and sample rate determine the width of each frame. There are two samples per analysis line.

In Figure 2.9, the recorded time history was sampled at 8,192Hz and 4,096 lines of resolution. The resulting frame width was one second. If the lines of resolution were changed to 1,024, the frame width would be 0.25 seconds.

Using a sample rate that is an exponential of 2 (2n) usually results in a PSD with lines spaced at a convenient interval. This is important to remember when selecting an initial sample rate.

Figure 2.9. A time-history graph divided into equal frames.

Note: for more information on adjusting lines of resolution, consider reviewing the following resource: Adjusting Random Lines of Resolution technical note.

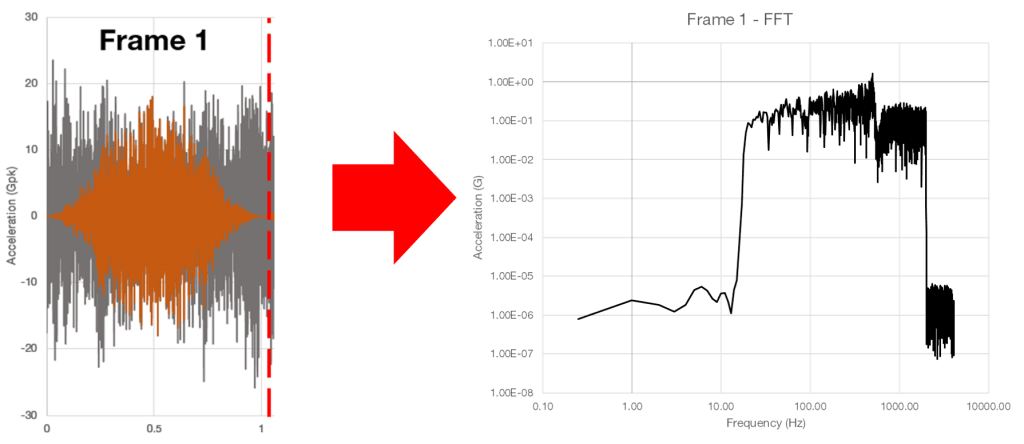

2. Calculate the FTT for each frame after applying a window function

The FFT assumes data are an infinite series. Therefore, it interprets the starting and ending points of each frame as if they are next to one another. Normally, random data are not an infinite series, so we must apply a window function before calculating the FTT.

Without a window function, the starting and ending points may be different and result in a transient spike between the two points. The transient spike would show up in the FFT as high-frequency energy.

Discontinuities

The sharp transition between starting and ending points is a discontinuity. These discontinuities are called spectral leakage and are reflected in the FFT calculation. Applying a window function removes the emphasis on the discontinuities and reduces the spectral leakage.

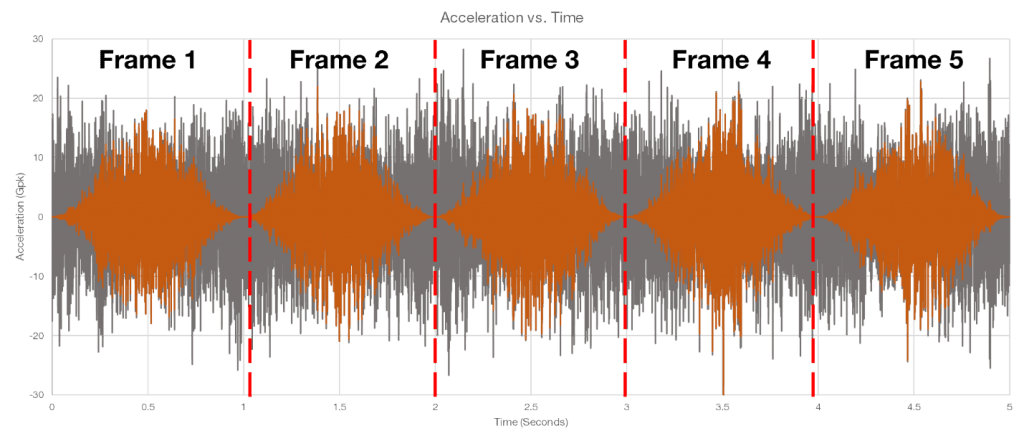

Ideally, data would have identical starting and ending points in every frame of data. As this is not the case, we must minimize any discontinuity by using a window function (Figure 2.10).

Figure 2.10. Applying a window function on each frame to reduce spectral leakage.

Windowing

As there is more than one window function, we must determine which one is most suitable for the application. A window function is evaluated by two components: the side lobe and the main lobe.

Figure 2.10 displays a Hanning window function. The grey waveform is the original waveform and the orange waveform is the windowed data. The Hanning function has a high and wide main lobe and side lobes at nearly zero. There is little to no discontinuity between the starting and ending points, which results in accurate frequency measurement.

The Hanning and Blackman functions are the most common window functions in vibration testing. Both functions have a good frequency resolution, minimal discontinuity, and, therefore, minimal leakage. Other window functions may be appropriate for other applications.

For a comprehensive list of common (and not-so-common) window functions, view the Table of Window Functions Details.

The transform uses the windowed data to calculate the FFT for each frame, thereby transforming the signal from the time domain into the frequency domain (see Figure 2.11.) This linear transformation gives us the ability to observe the frequency content of the time-history waveform.

Figure 2.11. Calculating the FFT for each frame.

FFT and Signal Analysis

The FFT is widely used in signal processing and analysis. Most importantly, it displays the frequencies in a waveform and their proportions. Engineers can use this information to determine many things, including:

- Frequency excitation at a defined period

- Peak acceleration of each frequency in a windowed frame of data

- Distribution of peaks

- Harmonic content

We can also apply weighting factors to an FFT. If our only goal was to generate an FFT, we might apply a weighting factor to ensure 1Gpk of the time domain data is equal to 1Gpk in the FFT. When the goal is to generate a PSD, we would normalize the window function to preserve the input power.

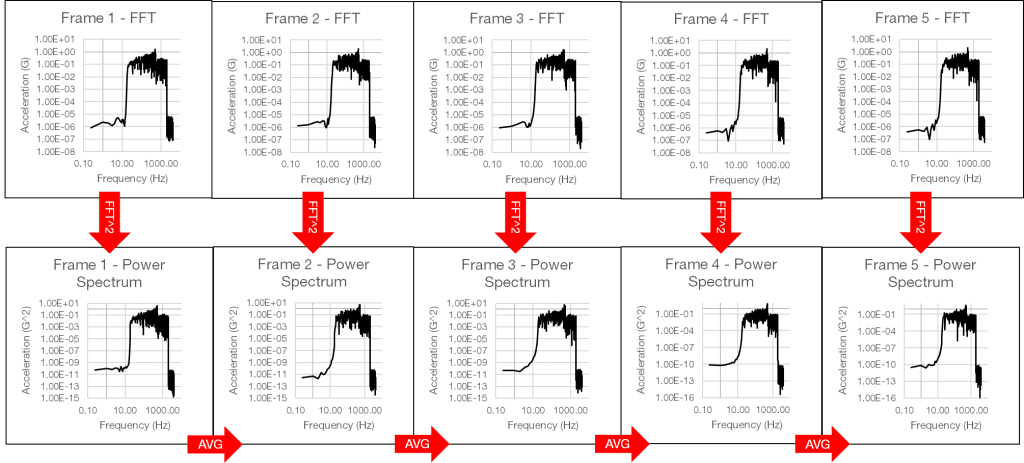

3. Square the individual FFTs for each frame and find an average

Next, square the individual FFTs for each frame and find the average squared amplitude (Figure 2.12).

Figure 2.12. Squaring the individual FFT and finding the average squared amplitude.

The PSD displays the average energy at a single frequency over a period of time. Initially, it will have a variance or “hashiness.” As the average collects more frames of similar data, the overall variance will decrease, the accuracy will increase, and the PSD will appear much smoother.

The total amount of time included in a PSD is related to the averaging parameter called degrees-of-freedom (DOF). The more frames of data that are averaged together, the higher the DOF.

Simply put, more FFTs will result in a better PSD. However, large numbers of FFTs require more time to collect and calculate.

Note: for more information on degrees of freedom, consider reviewing the following resources:

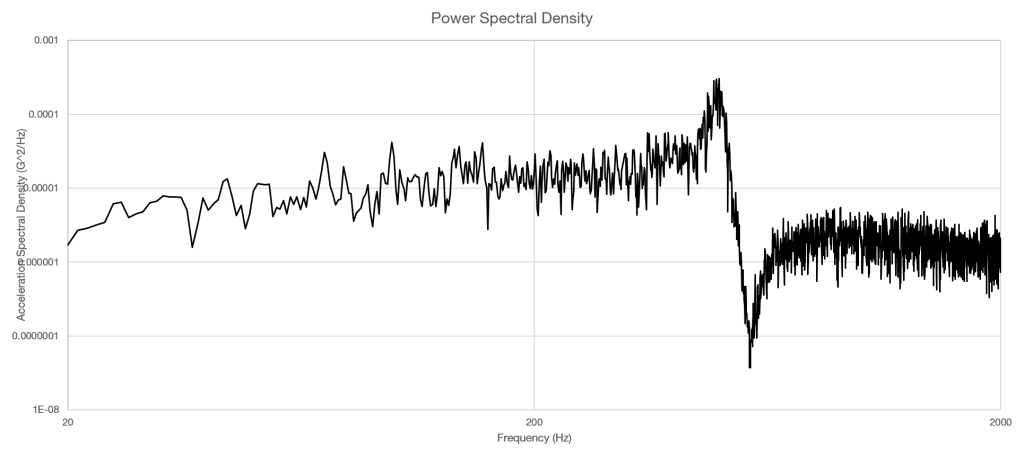

4. Normalize the calculation to a single hertz

Finally, the algorithm takes the average squared FFT and normalizes it to a single hertz, and a PSD is determined (Figure 2.13). For acceleration, the resulting unit is G2/Hz.

Figure 2.13. The resulting power spectral density (PSD).

Using the PSD, the response of the device under test is clear. As more frames of data are added to the PSD, the variance will continue to decrease and the PSD will become smoother.

For a more in-depth look at variance and methods of PSD smoothing, watch the webinar on Instant Degrees of Freedom. iDOF® is a feature from Vibration Research that quickly and effectively reduces the variance and creates a smooth PSD. This is the only mathematically justified method for displaying a smooth PSD trace in a short period of time.

Additional Parameters to Consider

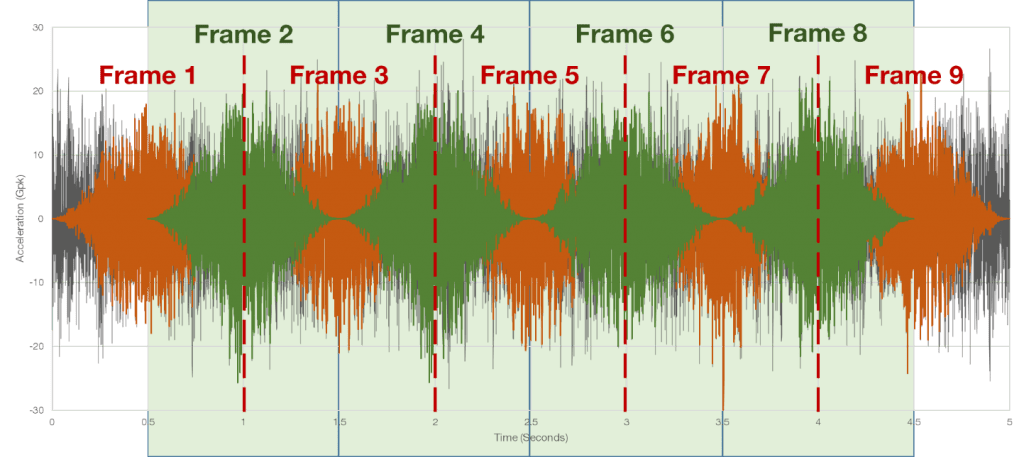

Overlapping

Overlapping is an additional technique that engineers often use during PSD calculation, although it is not required. It includes more of the original data in the PSD and generates more degrees of freedom within a defined period.

With a 0% overlap, each frame of data is completely separated. When frames are overlapped, some data in each frame are not accounted for due to the applied window functions. For each frame of data in the PSD, two degrees of freedom are calculated for the total average.

Example

If we create a PSD with:

- 0% overlap

- 120 DOF

- 8,192Hz sample rate

- 4,096 lines of resolution

- Hanning window function (1-second frames)

We will need to average 60 seconds worth of data to achieve 120 degrees of freedom.

With a 50% overlap, there would be 0.5 seconds between the start of each frame, but each frame would still be 1 second in length. Frames do not result in 2 DOF per FFT when they are overlapped. A 50% overlap would result in around 1.85 DOF per FFT; a 75% overlap would result in around 1.2 DOF per FFT.

Therefore, for a 50% overlap, 120 DOF, 8,192Hz, 4,096 lines of resolution, and a Hanning window PSD, we can achieve the desired PSD in 64.8 frames in 32.4 seconds.

Figure 2.14. Overlapping windowed frames.

In the original example with 0% overlap, there were five frames of data, resulting in 5 FFTs. With the 50% overlap shown in Figure 2.14, the same section of data results in 9 FFTs.

Lines of Resolution

The last parameter to consider in the PSD calculation is the lines of resolution. Along with the sample rate, the lines of resolution value determines how far apart each analysis point is spaced on the PSD. A higher value will result in a more accurate PSD but requires a larger number of samples.

Many test standards require a specific lines of resolution value for a resonance to properly display the peak. If too few lines are used in a PSD, the result is similar to under-sampling a waveform: the distance between analysis points will be too great and the gap between will not be appropriately accounted for. A minimum requirement of three or more lines of resolution is needed to properly resolve a resonance.